Test validity

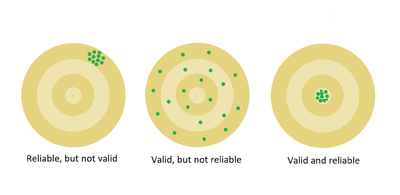

[[File:test validity.png|400px|thumb|Fig. 1 Visual representation of reliability and validity]] | [[File:test validity.png|400px|thumb|Fig. 1 Visual representation of reliability and validity]] | ||


Latest revision as of 05:50, 18 November 2023

Test validity - an indicator of the extent to which a test measures what it is supposed to measure, as defined by E. Carmines and R. Zeller [1]. Validity simply tells you how accurate a test is for your field of focus.

A test's validity is established in reference to a specific purpose and to specific groups, called reference groups. Test developers must determine whether their test can be used appropriately with the particular type of people (the target group) who will be tested. Most importantly, the test should measure what it claims to measure, not some other characteristic [2]. In other words, the purpose of testing and the intended use of the information gathered must always be taken into account. On a test with high validity, the fields or competencies tested are closely linked to the test's intended purpose. The higher a test's validity, the more relevant its outcomes and the information gathered from the assessment are to that purpose.

Types of validity - methods for conducting validation studies

As discussed by E. Carmines and R. Zeller, there are traditionally three main types of validity:

- Criterion-related validity or instrumental validity (concurrent and predictive) - assessed by calculating the correlation between your measurement and an established standard of comparison (the criterion)

- Content-related validity or logical validity - checks whether an assessment adequately represents all aspects of what is to be measured

- Construct-related validity - ensures that the method of measurement relates to the construct you want to test
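Criterion-related validity is usually summarised by a validity coefficient, which is the correlation between test scores and the criterion. The sketch below computes Pearson's r for a small set of made-up scores. The data, the variable names and the choice of Pearson's r are illustrative assumptions and are not taken from the sources cited here.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of the same length (at least 2 values)")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical scores for eight people: a new test and an established
# criterion measure (for example, a later course grade on a 0-4 scale).
test_scores = [52, 61, 58, 70, 45, 66, 73, 49]
criterion_scores = [3.1, 3.4, 3.0, 3.9, 2.6, 3.5, 3.8, 2.9]

validity_coefficient = pearson_r(test_scores, criterion_scores)
print(f"validity coefficient r = {validity_coefficient:.2f}")
```

If the criterion is measured at the same time as the test, the coefficient reflects concurrent validity. If it is measured later, it reflects predictive validity.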

Some sources also discuss face validity, which refers to the extent to which an assessment appears to measure what it is supposed to measure [3].

These types tend to overlap - depending on the circumstances, one or more may be applicable.

Measuring content validity

One method of measuring content validity was developed by C. H. Lawshe in 1975. It quantifies agreement among raters, who are subject-matter experts (SMEs), on how essential a particular item is. Each expert answers the following question for each item: "Is the skill or knowledge measured by this item 'essential,' 'useful, but not essential,' or 'not necessary' to the performance of the construct?" From these ratings, Lawshe derived the content validity ratio (CVR):

$$CVR = \frac{n_e - N/2}{N/2}$$

where $n_e$ is the number of SMEs indicating "essential" and $N$ is the total number of SMEs, so that $-1 \le CVR \le +1$.

Positive values indicate that more than half of the experts rated the item as essential, so the item has some content validity. A CVR of 0 means that exactly half did. The more panelists agree that a particular item is essential, the higher that item's level of content validity [4].
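Lawshe's ratio, CVR = (n_e - N/2) / (N/2), can be computed directly for each item. In this sketch, the function name and the panel of ten raters with its "essential" counts are hypothetical. Only the formula itself comes from Lawshe.

```python
def content_validity_ratio(n_essential: int, n_experts: int) -> float:
    """Lawshe's content validity ratio: (n_e - N/2) / (N/2)."""
    if n_experts <= 0:
        raise ValueError("n_experts must be positive")
    if not 0 <= n_essential <= n_experts:
        raise ValueError("n_essential must be between 0 and n_experts")
    half = n_experts / 2
    return (n_essential - half) / half


# Hypothetical panel of 10 SMEs: number rating each item "essential".
essential_counts = {"item 1": 9, "item 2": 5, "item 3": 3}

for item, n_e in essential_counts.items():
    print(item, content_validity_ratio(n_e, 10))
# item 1 0.8   (more than half said "essential")
# item 2 0.0   (exactly half)
# item 3 -0.4  (fewer than half)
```

The ratio reaches +1 only when every expert rates the item essential and -1 when none do.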

Validity and reliability of tests

Both concepts from test theory are used to evaluate the quality of a test and to determine whether the way it measures something is adequate. They are closely related but refer to different properties. Reliability concerns the consistency of repeated measurements, that is, whether measures can be reproduced. Validity concerns their accuracy.

A valid test should be reliable, but a reliable one is not necessarily valid, as reproducible results may not be correct.
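The point that a reliable test need not be valid can be illustrated with made-up numbers. In this example, scores are almost identical on a test and a retest, giving a high test-retest correlation (a common reliability estimate), yet they barely relate to the criterion the test is supposed to reflect. All data and names here are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical scores for six people.
first_sitting = [10, 12, 15, 18, 20, 22]
second_sitting = [11, 12, 14, 18, 21, 22]  # retest a few weeks later
criterion = [5, 9, 2, 8, 3, 6]             # what the test should reflect

reliability = pearson_r(first_sitting, second_sitting)  # consistency
validity = pearson_r(first_sitting, criterion)          # accuracy

print(f"test-retest reliability: {reliability:.2f}")
print(f"validity coefficient:    {validity:.2f}")
```

A test like this one is consistent, but it does not measure what it is meant to measure.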

Examples of test validity

- Content validity: the extent to which the content of a test accurately reflects the knowledge or skills being measured. For example, a mathematics test has content validity if its questions accurately reflect the topics that the course curriculum should cover.

- Criterion-related validity: the extent to which test scores correspond to an external criterion, such as a later outcome. For example, a test designed to measure students' reading proficiency may be given to a group of students, and their scores then compared with their actual performance in a reading class. If the test accurately predicts the students' performance in the class, it has criterion-related (here, predictive) validity.

- Construct validity: the extent to which a test measures the theoretical construct it is intended to measure. For example, a new test designed to measure intelligence may be given to a group of people whose scores on an established IQ test are known. If the results of the new test correlate strongly with those IQ scores, this supports the construct validity of the new test.
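The content validity example in the list above can be made concrete with a simple coverage check. Each test item is mapped to the curriculum topic it addresses, and the check then shows which topics have no items and which items fall outside the curriculum. This is a simplified, hypothetical illustration with invented topic names and item mapping, not a standard validity index. In practice, experts judge how well the items represent the domain.

```python
# Hypothetical curriculum for a mathematics course.
curriculum = {"fractions", "decimals", "percentages", "ratios", "geometry"}

# Hypothetical mapping of test items to the topic each one addresses.
item_topics = {
    "Q1": "fractions",
    "Q2": "fractions",
    "Q3": "decimals",
    "Q4": "percentages",
    "Q5": "trigonometry",  # not part of this course
}

covered = curriculum & set(item_topics.values())
missing = curriculum - covered
off_topic = [item for item, topic in item_topics.items() if topic not in curriculum]
coverage = len(covered) / len(curriculum)

print(f"share of curriculum topics covered: {coverage:.0%}")
print("topics with no items:", sorted(missing))
print("items outside the curriculum:", off_topic)
```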

Advantages of test validity

Establishing test validity has several advantages. It allows researchers to determine the extent to which a test measures what it is supposed to measure, which helps ensure that their results are accurate. It also makes comparisons of results between studies more dependable, because validity can be established across multiple contexts. When a test's validity is established before data collection, the results of a study can be interpreted more accurately. Finally, validation can help reduce the potential for bias in a study's results, since it helps ensure that the test actually measures what it is designed to measure.

Limitations of test validity

Test validity has limitations, and several factors can make it challenging to assess how well a test performs. These include:

- Poorly constructed tests, which may have inaccurate questions or lack sufficient detail to measure the desired performance.

- Low reliability. Reliability is the extent to which a test consistently measures the same performance over time, and low reliability limits how valid a test can be.

- Inconsistent scoring of test results, which can lead to inaccurate assessments of performance.

- Cultural and language bias, which can lead to results that are not representative of the group as a whole.

- Validity issues that arise from changes in the environment or context in which the test is administered, such as a shift in the population being tested.
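A standard result from classical test theory shows why low reliability is a limitation. This result is the ceiling implied by Spearman's correction for attenuation: the correlation between a test and a criterion cannot exceed the square root of the product of their reliabilities, $r_{xy} \le \sqrt{r_{xx} r_{yy}}$. The sketch below evaluates this upper bound. The function name and the reliability values used are hypothetical.

```python
import math


def max_validity(reliability_test: float, reliability_criterion: float = 1.0) -> float:
    """Upper bound on a validity coefficient under classical test theory.

    r_xy <= sqrt(r_xx * r_yy); with a perfectly reliable criterion
    (r_yy = 1) the bound reduces to sqrt(r_xx).
    """
    for r in (reliability_test, reliability_criterion):
        if not 0.0 <= r <= 1.0:
            raise ValueError("reliability coefficients must lie between 0 and 1")
    return math.sqrt(reliability_test * reliability_criterion)


# Hypothetical test reliabilities, assuming a perfectly reliable criterion.
for r_xx in (0.90, 0.64, 0.36):
    print(f"reliability {r_xx:.2f} -> validity at most {max_validity(r_xx):.2f}")
```

The bound is only an upper limit. A test can be highly reliable and still have a low validity coefficient.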

Other approaches related to test validity

Test validity, as defined by E. Carmines and R. Zeller, indicates the extent to which a test measures what it is supposed to measure. Other approaches related to test validity include:

- Content validity - a measure of the extent to which the test items adequately represent all aspects of the domain the test is intended to measure.

- Criterion validity - a measure of the extent to which scores on the test predict or correspond to scores on another established measure (the criterion) in the same domain.

- Construct validity - a measure of the extent to which the test measures a theoretical construct.

- Concurrent validity - a form of criterion validity that measures the extent to which scores on the test correlate with scores on other tests of the same domain administered at the same time.

In summary, test validity indicates the extent to which a test measures what it is supposed to measure. Content, criterion, construct, and concurrent validity each examine a different aspect of it.

Footnotes


References

- Carmines E. G., Zeller R. A. (1979), Reliability and Validity Assessment, SAGE Publications, Beverly Hills, California

- Crocker L., Algina J. (1986), Introduction to Classical and Modern Test Theory, Holt, Rinehart and Winston, Orlando, Florida

- English F. W. (ed.) (2006), Encyclopedia of Educational Leadership and Administration, SAGE Publications, Thousand Oaks, California

- Lawshe C. H. (1975), A quantitative approach to content validity, Personnel Psychology, Bowling Green State University

- Newton P., Shaw S. (2014), Validity in Educational and Psychological Assessment, SAGE Publications, United Kingdom

- U.S. Department of Labor Employment and Training Administration (1999), Testing and Assessment: An Employer's Guide to Good Practices, U.S. Department of Labor Employment and Training Administration

- Wainer H., Braun H. I. (eds.) (2013), Test Validity, Routledge, London

Author: Anna Strzelecka